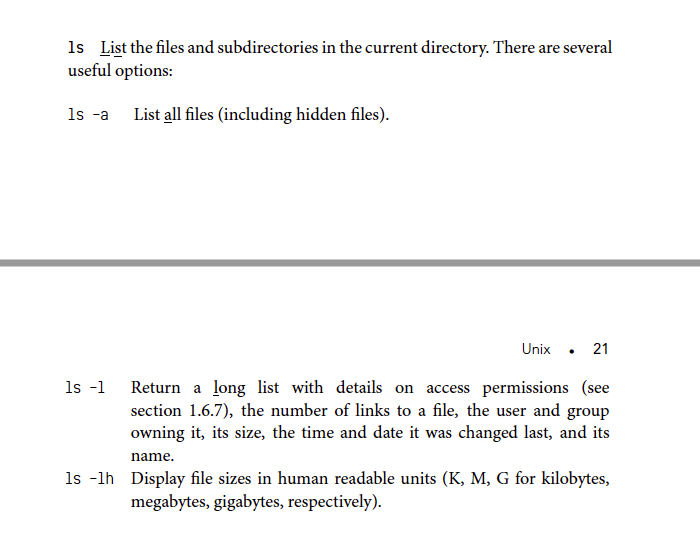

ls

ls -lh

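The -l option of ls produces a long listing. Along with permissions, owner, and modification time, it reports the size of each file in bytes.

Adding the flag -h makes the sizes “human-readable” (i.e., it uses unit suffixes such as K and M instead of printing the raw number of bytes).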

```bash
(base) yuxuan@Yuxuan sandbox % ls -lh
```
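To compare the two listings on a file whose size is known in advance, here is a minimal sketch. It creates a hypothetical 2048-byte file (big.bin is an assumed name) with head -c and then lists it both ways:

```bash
# Create a hypothetical 2048-byte file filled with zero bytes
head -c 2048 /dev/zero > big.bin

# Long listing: the 5th column is the size in bytes
ls -l big.bin

# Same listing, with the size abbreviated using a K suffix
ls -lh big.bin
```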

The size could also be calculated as

grep

```bash
# To count the occurrences of a given string, use
```

grep is a powerful command that finds all the lines of a file that match a given pattern.
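A minimal sketch on a hypothetical two-record FASTA file (example.fasta is an assumed name). It prints the matching header lines and then uses -c to count them:

```bash
# Hypothetical two-record FASTA file
printf '>seq1\nACGT\n>seq2\nGGCC\n' > example.fasta

# Print every line matching the pattern '>' (here, the two header lines)
grep '>' example.fasta

# -c reports how many lines match, rather than printing them
n_headers=$(grep -c '>' example.fasta)
echo "$n_headers"
```

Note that -c counts matching lines, so a line that contains the pattern twice is counted only once.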

wc

wc reports the line, word, and byte (character) counts of a file. The option -l (wc -l) returns only the line count and is a quick way to get an idea of the size of a text file.
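A short sketch on a hypothetical three-line file (example.txt is an assumed name). It shows the full count, the line count alone, and a form that is convenient inside scripts:

```bash
# Hypothetical three-line text file
printf 'first line\nsecond line\nthird line\n' > example.txt

# Lines, words, and bytes, followed by the file name
wc example.txt

# Line count only
wc -l example.txt

# Reading from standard input omits the file name, which is handy in scripts;
# $(( )) strips the padding some wc versions add
n_lines=$(wc -l < example.txt)
echo $((n_lines))
```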

cut

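cut is used to select columns (fields) from each line of its input, and the option -d specifies the delimiter.

The additional option -f selects which column(s) to extract, either a single one (e.g., -f 1) or a range (e.g., -f 1-4 for columns 1 through 4).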

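```bash
# Keep only the FASTA header lines, extract the 4th comma-separated field, and show the first two results
grep '>' my_file.fasta | cut -d ',' -f 4 | head -n 2
```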
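To try the pipeline without the original data, the sketch below builds a hypothetical FASTA file (reads.fasta). Its headers hold comma-separated fields with a reads=<count> entry in the fourth position. That layout is an assumption for illustration, not necessarily the format of my_file.fasta:

```bash
# Hypothetical FASTA file whose headers hold comma-separated fields
printf '>id1,sampleA,2019,reads=120\nACGT\n>id2,sampleB,2020,reads=45\nGGCC\n>id3,sampleC,2021,reads=300\nTTAA\n' > reads.fasta

# Fourth field of each header, first two results only
grep '>' reads.fasta | cut -d ',' -f 4 | head -n 2
# reads=120
# reads=45

# A range of fields: columns 1 through 2
grep '>' reads.fasta | cut -d ',' -f 1-2
# >id1,sampleA
# >id2,sampleB
# >id3,sampleC
```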

sort

```bash
# Now we want to sort according to the number of reads. However, the number of reads
# is part of a more complex string. We can use -t '=' to split according to the = sign,
# and then take the second column (-k 2) to sort numerically (-n)
```
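A minimal sketch of the sort described above, run on hypothetical values of the form reads=<count> (counts.txt is an assumed file name). It also shows why -n matters:

```bash
# Hypothetical read-count fields of the form reads=<count>
printf 'reads=120\nreads=45\nreads=300\n' > counts.txt

# Split on '=', sort on the second field, compare numerically
sort -t '=' -k 2 -n counts.txt
# reads=45
# reads=120
# reads=300

# Without -n the comparison is lexicographic, so "300" comes before "45"
sort -t '=' -k 2 counts.txt
# reads=120
# reads=300
# reads=45
```

Adding -r reverses the order, which puts the entries with the most reads first.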

tail

```bash
# Display last two lines of the file
```
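A small sketch on a hypothetical four-line file (example.txt is an assumed name). It shows tail -n next to its counterpart head -n:

```bash
# Hypothetical four-line file
printf 'line1\nline2\nline3\nline4\n' > example.txt

# Last two lines
tail -n 2 example.txt
# line3
# line4

# First two lines, for comparison
head -n 2 example.txt
# line1
# line2
```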

tr

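tr is used to substitute (translate) characters. With the options shown below, it can also delete or squeeze them. It reads from standard input, so its input is usually piped in from echo or another command.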

Substitute all characters a with b:

```bash
$ echo "aaaabbb" | tr "a" "b"
```

Substitute every digit in the range 1 through 5 with 0:

```bash
$ echo "123456789" | tr 1-5 0
```

Substitute lowercase letters with uppercase ones:

```bash
$ echo "ACtGGcAaTT" | tr actg ACTG
```

We obtain the same result by using bracketed expressions that provide a predefined set of characters. Here, we use the set of all lowercase letters [:lower:] and translate into uppercase letters [:upper:]:

```bash
$ echo "ACtGGcAaTT" | tr '[:lower:]' '[:upper:]'
```

We can also indicate ranges of characters to substitute:

```bash
$ echo "aabbccddee" | tr a-c 1-3
```

Delete all occurrences of a:

```bash
$ echo "aaaaabbbb" | tr -d a
```

“Squeeze” all consecutive occurrences of a:

```bash
$ echo "aaaaabbbb" | tr -s a
```
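The tr options above combine well with grep and wc. The following sketch counts the G and C bases in a FASTA file. The file name and sequences are hypothetical. It also uses two flags not shown above: grep -v, which inverts the match, and tr -c, which complements the set, so that tr -cd deletes every character that is not listed:

```bash
# Hypothetical FASTA file with two short sequences
printf '>seq1\nACGTGC\n>seq2\nATAT\n' > gc.fasta

# Drop header lines (-v inverts the match), delete newlines, and count the remaining bases
total=$(grep -v '>' gc.fasta | tr -d '\n' | wc -c)

# -cd keeps only G and C (upper- or lowercase) and deletes everything else, newlines included
gc=$(grep -v '>' gc.fasta | tr -cd 'GCgc' | wc -c)

echo "GC bases: $((gc)) of $((total))"
# GC bases: 4 of 10
```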

Bash_script_hw1

http://computingskillsforbiologists.com/downloads/exercises/#unix

1

```bash
# 2. Write a script taking as input the file name and the ID of the individual, and returning the number of records for that ID.
```
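Here is one possible sketch of this exercise, not a reference solution. It assumes that the data file is tab-separated, has a single header row, and stores the individual's ID in the first column; the exercise text above does not confirm this layout. The logic is wrapped in a function, count_records (a hypothetical name), so it can be tried in an interactive shell. In a standalone script, the same pipeline would read the file name and ID from $1 and $2 directly.

```bash
# count_records FILE ID  (hypothetical helper)
# ASSUMPTION: tab-separated file, one header row, ID in column 1
count_records() {
    local file="$1" id="$2"
    # Skip the header, keep the ID column, count lines equal to the ID exactly (-x)
    tail -n +2 "$file" | cut -f 1 | grep -c -x -- "$id"
}

# Hypothetical example data
printf 'maleID\tGC\tT\n1\t10\t20\n1\t11\t21\n13\t9\t19\n' > records.tsv
count_records records.tsv 1    # prints 2
count_records records.tsv 13   # prints 1
```

Matching the whole line with -x keeps ID 1 from also matching ID 13.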

2

```bash
###################################################
```

3


```bash
# 2) Write a script that prints the number of rows and columns for each network
```
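Here is a hedged sketch for this exercise. It assumes that each network is stored as a plain-text matrix with one row per line, entries separated by spaces, and no header row; this layout is not stated above. The function print_dims is a hypothetical name. It counts rows with wc -l and counts columns by splitting the first row with tr -s:

```bash
# print_dims FILE...  (hypothetical helper)
# ASSUMPTION: each network is a matrix with one row per line,
# entries separated by spaces, and no header row
print_dims() {
    local f rows cols
    for f in "$@"; do
        # Rows: number of lines in the file
        rows=$(wc -l < "$f")
        # Columns: split the first row on spaces (squeezing repeats) and count non-empty entries
        cols=$(head -n 1 "$f" | tr -s ' ' '\n' | grep -c .)
        echo "$f $((rows)) $cols"
    done
}

# Hypothetical 2 x 3 network
printf '0 1 0\n1 1 0\n' > net1.txt
print_dims net1.txt   # prints: net1.txt 2 3
```

Because the function loops over "$@", a glob such as print_dims networks/*.txt (a hypothetical directory) would report every network in one call. wc -l counts newline characters, so a file whose last row lacks a trailing newline would be undercounted by one.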

4

```bash
###################################################
```

- Post title: bash command combo

- Post author: Yuxuan Wu

- Create time: 2022-01-20 19:38:03

- Post link: yuxuanwu17.github.io/2022/01/20/2022-01-20-bash-command-combo/

- Copyright Notice: All articles in this blog are licensed under BY-NC-SA unless stating additionally.